How Lander converts 3D world coordinates into 2D screen coordinates

All 3D games have some kind of projection system that takes coordinates from the 3D world and projects them onto the 2D screen. The most common approach is perspective projection, in which we convert 3D coordinates to 2D coordinates by dividing the x-coordinate (left-right) and y-coordinate (up-down) by the z-coordinate (into and out of the screen):

screen_x = x / z

screen_y = y / z
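
To make the effect of the division concrete, here is a minimal Python sketch of the two formulas. The function name `project` and the guard against a zero or negative z-coordinate are assumptions made for this illustration; they are not taken from any of the games' source code.

```python
def project(x, y, z):
    """Perspective-project a 3D point by dividing x and y by z."""
    if z <= 0:
        # Assumed guard: a point at or behind the viewer has no projection
        return None
    return x / z, y / z


# The same (x, y) offset lands closer to the centre as z increases
print(project(2.0, 1.0, 2.0))  # (1.0, 0.5)
print(project(2.0, 1.0, 8.0))  # (0.25, 0.125)
```

Quadrupling the distance shrinks both screen coordinates by a factor of four, which is exactly what makes distant objects look smaller.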

This is the approach used in both Elite and Aviator, and it's no surprise that it's also used in Lander. In Elite, the projection logic is in the PROJ routine, while in Aviator it's in the ProjectPoint routine. Lander, meanwhile, has two projection routines, one for particles at ProjectParticleOntoScreen, and another for object vertices at ProjectVertexOntoScreen.

At the core of all these routines is simple division by the z-coordinate, giving us a screen coordinate that we can clip appropriately to fit on-screen. For more details of the maths behind this projection, plus lots of information about other types of projection, see the Wikipedia article on perspective projection.

Lander's projection routines do this division using the shift-and-subtract algorithm. This algorithm is similar in concept to the shift-and-add multiplication algorithm that Lander uses instead of MUL instructions, except this algorithm divides instead of multiplying. See the deep dive on Lander's origins on the ARM1 for more about shift-and-add multiplication in Lander, and see this deep dive in my Elite project for a discussion of the division algorithm (albeit for 8-bit computers).
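
As a rough illustration of the idea, here is the textbook form of shift-and-subtract division in Python, working on unsigned integers one bit at a time. This is a sketch of the general technique rather than a transcription of Lander's ARM code, which will differ in register use, precision and sign handling; the function name and the `bits` parameter are assumptions for the example.

```python
def shift_and_subtract_divide(dividend, divisor, bits=32):
    """Unsigned division using only shifts, comparisons and subtractions.

    Returns (quotient, remainder). Assumes 0 <= dividend < 2**bits.
    """
    if divisor == 0:
        raise ZeroDivisionError("divisor must be non-zero")

    quotient = 0
    remainder = 0

    # Work through the dividend from its top bit down to bit 0
    for i in range(bits - 1, -1, -1):
        # Shift the next dividend bit into the running remainder
        remainder = (remainder << 1) | ((dividend >> i) & 1)

        # Make room for the next quotient bit
        quotient <<= 1

        # If the divisor fits, subtract it and record a 1 in the quotient
        if remainder >= divisor:
            remainder -= divisor
            quotient |= 1

    return quotient, remainder


print(shift_and_subtract_divide(100, 7))  # (14, 2)
```

Each pass through the loop produces one bit of the result, so the cost is fixed by the number of bits rather than by the size of the numbers involved.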

Another interesting point to note is that particles have a stricter set of rules than object vertices around whether they are projected onto the screen. The rules for particles are:

- Projected screen x-coordinate must be in the range 0 to 319

- Projected screen y-coordinate must be in the range 0 to 238

- Projected coordinate must be at a viewing angle of less than 45 degrees (i.e. within a 45-degree viewing cone)

- If the particle's z-coordinate is greater than &80000000, it is deemed too far away to project

The y-coordinate range of 0 to 238 is the screen height (256 pixels) minus the two text lines that are reserved for the score bar at the top of the screen (16 pixels).

So particles must project onto the screen to be drawn, and they must be within a 45-degree viewing cone from the viewer. This means that anything that is too far to the sides will not be projected and will therefore not be drawn.
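
The four particle rules can be sketched as a single Python function. The screen limits and the &80000000 distance threshold come from the list above, but everything else is an assumption made for illustration: the names, the pixel mapping (`CENTRE_X`, `CENTRE_Y` and `SCALE`), the extra guard against a zero or negative z-coordinate, and the use of |x| < z and |y| < z as the 45-degree cone test.

```python
SCREEN_X_RANGE = range(0, 320)   # screen x must be 0 to 319
SCREEN_Y_RANGE = range(0, 239)   # screen y must be 0 to 238
TOO_FAR_Z = 0x80000000           # beyond this, the particle is too far away

# Hypothetical mapping from x/z and y/z to pixels (not Lander's own values)
CENTRE_X, CENTRE_Y, SCALE = 160, 119, 160


def project_particle(x, y, z):
    """Return (screen_x, screen_y), or None if any particle rule fails."""
    if z <= 0 or z > TOO_FAR_Z:
        return None  # assumed guard for z <= 0, plus the too-far rule

    if abs(x) >= z or abs(y) >= z:
        return None  # outside the 45-degree viewing cone (assumed test)

    screen_x = CENTRE_X + (SCALE * x) // z
    screen_y = CENTRE_Y + (SCALE * y) // z

    if screen_x not in SCREEN_X_RANGE or screen_y not in SCREEN_Y_RANGE:
        return None  # projects outside the visible screen area

    return screen_x, screen_y


print(project_particle(50, -25, 100))       # (240, 79)
print(project_particle(150, 0, 100))        # None: outside the viewing cone
print(project_particle(0, 90, 100))         # None: off the bottom of the screen
print(project_particle(0, 0, 0x80000001))   # None: too far away
```

In this sketch a rejected particle simply returns `None`, so the caller can skip drawing it without any further checks.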

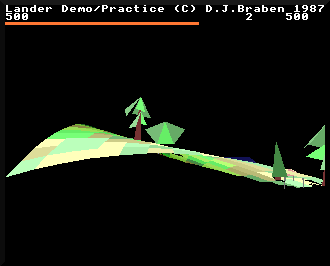

In comparison, object vertices only abide by the last rule - if they are too far away, then they are not projected onto the screen, but otherwise they are projected into 2D coordinates, whatever the result. There is a good reason for this: objects are allowed to be only partially on-screen, as in this rather extreme example:

In this case not only can we see the underneath of the landscape, but part of the tree on the right is off-screen. If we refused to project off-screen coordinates for 3D objects, or restricted them to a particular viewing cone (like we do with particles), then objects would break up strangely as they approached the screen edge. A better solution is to allow projection of all object vertices that are close enough to be visible, and let the DrawTriangle routine take care of clipping its triangles to the edge of the screen (see the deep dive on drawing triangles for more details).
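
To illustrate the difference, here is a Python sketch of a vertex projection that applies only the distance rule, so off-screen results are passed through untouched for a later clipping stage. As before, the pixel mapping (`CENTRE_X`, `CENTRE_Y`, `SCALE`), the function name and the guard against a zero or negative z-coordinate are assumptions for the example, not values from Lander's source.

```python
TOO_FAR_Z = 0x80000000  # beyond this, the vertex is too far away to project

# Hypothetical mapping from x/z and y/z to pixels (not Lander's own values)
CENTRE_X, CENTRE_Y, SCALE = 160, 119, 160


def project_vertex(x, y, z):
    """Project a vertex, rejecting it only if it is too far away."""
    if z <= 0 or z > TOO_FAR_Z:
        return None  # assumed guard for z <= 0, plus the too-far rule

    # No screen-range or viewing-cone checks: clipping happens later
    return CENTRE_X + (SCALE * x) // z, CENTRE_Y + (SCALE * y) // z


# A triangle whose third vertex lies well off the right of the screen
triangle = [(-50, 0, 100), (50, 0, 100), (300, 80, 100)]
print([project_vertex(*vertex) for vertex in triangle])
# [(80, 119), (240, 119), (640, 247)]
```

All three vertices get usable 2D coordinates, so the triangle keeps its shape and the drawing routine can clip it at the screen edge instead of losing a corner.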

Incidentally, not all 3D games use the perspective projection. Geoff Crammond's epic driving simulation Revs uses pitch and yaw angles instead of the perspective projection; if you want to go down this particular rabbit hole, check out the deep dive article in my Revs project.